LiteLLM

LiteLLM is very useful for connecting to multiple AI APIs through one interface, in this case Open WebUI.

To follow these steps, I assume you already have Open WebUI installed.

Step 1. Install LiteLLM

Clone the LiteLLM repository:

git clone https://github.com/BerriAI/litellm

Create a .env file inside the cloned repository and add two lines to it:

cd litellm

vim .env

Enter two randomly generated keys, each starting with sk-. For example:

LITELLM_MASTER_KEY="sk-62N*c#GNAaQnpZO"

LITELLM_SALT_KEY="sk-WwApZ#lcRHZfI%50"

You will need the LITELLM_MASTER_KEY later to log in to LiteLLM. The LITELLM_SALT_KEY is used in the background to encrypt other keys.

The next step is to start LiteLLM:
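If you do not want to invent the keys by hand, a small script can generate them. This is only a sketch using Python's standard secrets module. The helper name make_key and the 24-byte length are my own choices, not something LiteLLM requires. The only convention taken from above is the sk- prefix.

```python
import secrets


def make_key(nbytes: int = 24) -> str:
    """Return a random key with the sk- prefix used in the .env example.

    token_urlsafe only emits letters, digits, '-' and '_', so the value
    needs no escaping inside a quoted .env entry.
    """
    return "sk-" + secrets.token_urlsafe(nbytes)


if __name__ == "__main__":
    # Print two independent keys in .env syntax, ready to paste.
    print(f'LITELLM_MASTER_KEY="{make_key()}"')
    print(f'LITELLM_SALT_KEY="{make_key()}"')
```

Run it once and paste the two printed lines into the .env file.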

docker compose up -d

You should now see the Docker containers running, with LiteLLM reachable on the default port 4000:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

75c5f4c779eb ghcr.io/berriai/litellm:main-stable "docker/prod_entrypo…" 5 minutes ago Up 5 minutes (unhealthy) 0.0.0.0:4000->4000/tcp, [::]:4000->4000/tcp litellm-litellm-1

6bedd6008ec5 prom/prometheus "/bin/prometheus --c…" 5 minutes ago Up 5 minutes 0.0.0.0:9090->9090/tcp, [::]:9090->9090/tcp litellm-prometheus-1

b8b9b0b80ab5 postgres:16 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes (healthy) 0.0.0.0:5432->5432/tcp, [::]:5432->5432/tcp litellm_db
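Since the litellm container above still reports (unhealthy) a few minutes after start, it can help to check whether the proxy answers before opening the browser. The following is a minimal sketch, assuming the proxy listens on 127.0.0.1:4000 as in the output above and exposes the /health/liveliness endpoint described in the LiteLLM documentation. The function names are hypothetical.

```python
import urllib.request


def liveliness_url(base_url: str) -> str:
    """Build the liveliness URL for a LiteLLM proxy base URL."""
    return base_url.rstrip("/") + "/health/liveliness"


def proxy_is_up(base_url: str = "http://127.0.0.1:4000", timeout: float = 3.0) -> bool:
    """Return True if the proxy answers the liveliness check with HTTP 200."""
    try:
        with urllib.request.urlopen(liveliness_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts and HTTP error responses.
        return False


if __name__ == "__main__":
    print("LiteLLM reachable:", proxy_is_up())
```

If this prints False, docker compose logs is the usual place to look for the reason.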

Step 2. Log in to LiteLLM

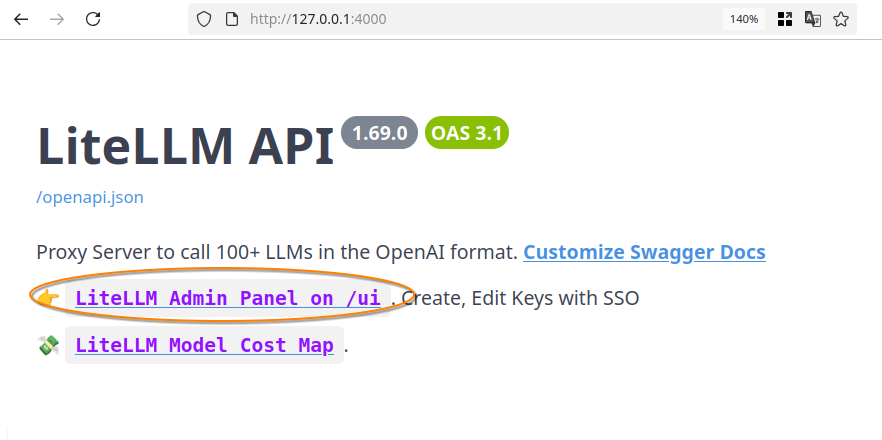

Open http://127.0.0.1:4000/ in your browser, click on LiteLLM Admin Panel on /ui, and log in with the user admin. The password is the LITELLM_MASTER_KEY you set in the .env file.

Step 3. Add models

In the LiteLLM admin panel, go to Models -> Add new model and add a model from OpenAI, Anthropic, Groq, or any other provider you want.

You will need an account and an API key for each provider you add.

Step 4. Create Virtual Keys

In the LiteLLM admin panel, go to Virtual Keys and click Create New Key. You can optionally add a budget to this key.
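Before wiring the key into Open WebUI, you can test it directly against the proxy. The sketch below assumes LiteLLM's OpenAI-compatible /v1/chat/completions endpoint on port 4000. The helper names, the key sk-your-virtual-key, and the model name my-model are placeholders: use the virtual key you just created and a model name exactly as you entered it in Step 3.

```python
import json
import urllib.request


def build_chat_request(base_url: str, virtual_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Prepare an OpenAI-style chat completion request for the LiteLLM proxy."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The virtual key is sent as a normal bearer token.
            "Authorization": f"Bearer {virtual_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

With the proxy running, send(build_chat_request("http://127.0.0.1:4000", "sk-your-virtual-key", "my-model", "Hello")) should return an OpenAI-style response, with the reply text under choices[0]["message"]["content"]. An HTTP 401 error usually points to a wrong or expired key.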

Step 5. Add the Virtual Key to Open WebUI

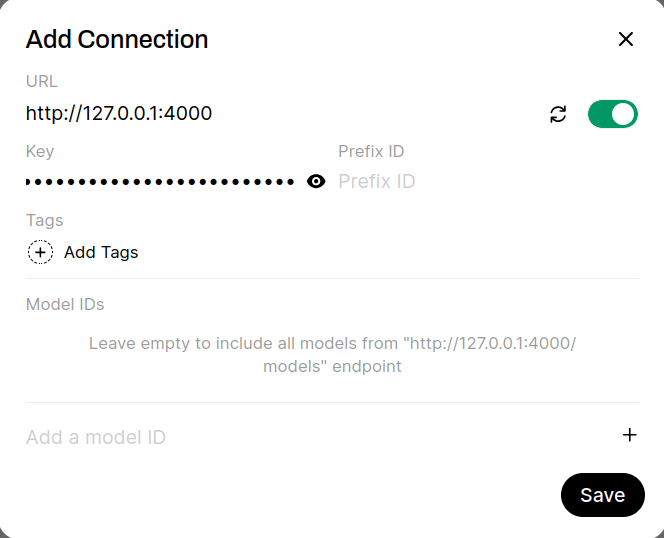

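Go back to Open WebUI and, under Settings -> Connections -> Manage OpenAI API Connections, click on Add Connection. Enter the LiteLLM URL (http://127.0.0.1:4000 in this setup) and use the virtual key from Step 4 as the API key.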